Defining uncertainty in the Israeli lottery

By Amit Grinson in R

July 9, 2020

“I have a great idea to get rich. All we need is a lot of money.”

― A meme on the internet

A while ago I was reading about Bernoulli trials and decided that I wanted to explore them further. I was wondering what would be an interesting case study for such a topic, and then it hit me (💡): Why not explore lottery probabilities? Little did I know that this topic would lead me down the geometric distribution road and help me better understand the uncertainty in lotteries. So, shall we?

The rules

We’ll be exploring the probability of winning the Israeli lottery held by the Pais organization, a well-known lottery enterprise in Israel. The ticket we’ll be discussing is applicable to the main lottery called ‘Loto’ and costs 5.8 New Israeli Shekels (NIS), approximately ~1.6 dollars.

Pais holds their lotteries twice a week with the regular prize of 5 million NIS, equivalent to $1,420,000. If there’s no winner for a given week, the prize accumulates to the following lottery. For the sake of the post I’ll only discuss winning a first place prize (be the only winner). In addition I won’t be discussing the effect of prize increase on when to participate.

When filling out a lottery form you choose 6 numbers in the range of 1–37 and a ‘strong’ number in the range of 1–71. In order to win first place you have to get guess correctly both the 6 number set and the strong number. Luckily, the order of the 6 numbers doesn’t matter, and therefore if you wrote or you’re good on both (Also known as combinations, more on that in a minute).

Another ‘luck’ in our favor is that in each lottery ticket we have the option to fill out two sets of numbers, thus doubling our odds of winning. I’m assuming we’re on the same page and you won’t use both of your attempts to guess the same sets of numbers, so that somewhat increases our odds of winning. Speaking of odds, let’s have a look at them.

The odds

To understand the lottery probabilities, we need to calculate the probability of guessing a combination of 6 numbers out of 37 options along with one strong number out of 7 possible numbers. In order to do this, we can turn to combinations:

In mathematics, a combination is a selection of items from a collection, such that (unlike permutations) the order of selection does not matter.

― Wikipedia

That’s exactly what we need. We want to calculate the probability of randomly guessing six numbers regardless of their order; If the order was important we’d want to look at permutations. In addition, each number is drawn without replacement, and therefore there can’t be repetition of the same number.

The formula to calculate combinations is as follows: Where is the number of options to choose from and is the number of choices we make.

Inputting our numbers we get , and now we just calculate away. However, don’t forget we also need to guess another number out of 7 possible numbers (the strong one), so we’ll multiply our outcome by , yielding a probability of . ‘Luckily’ we choose two sets of numbers in a given ticket, so we multiply the probability by 2.

Therefore, the probability of winning the lottery is $p = \frac{1}{8136744}$.

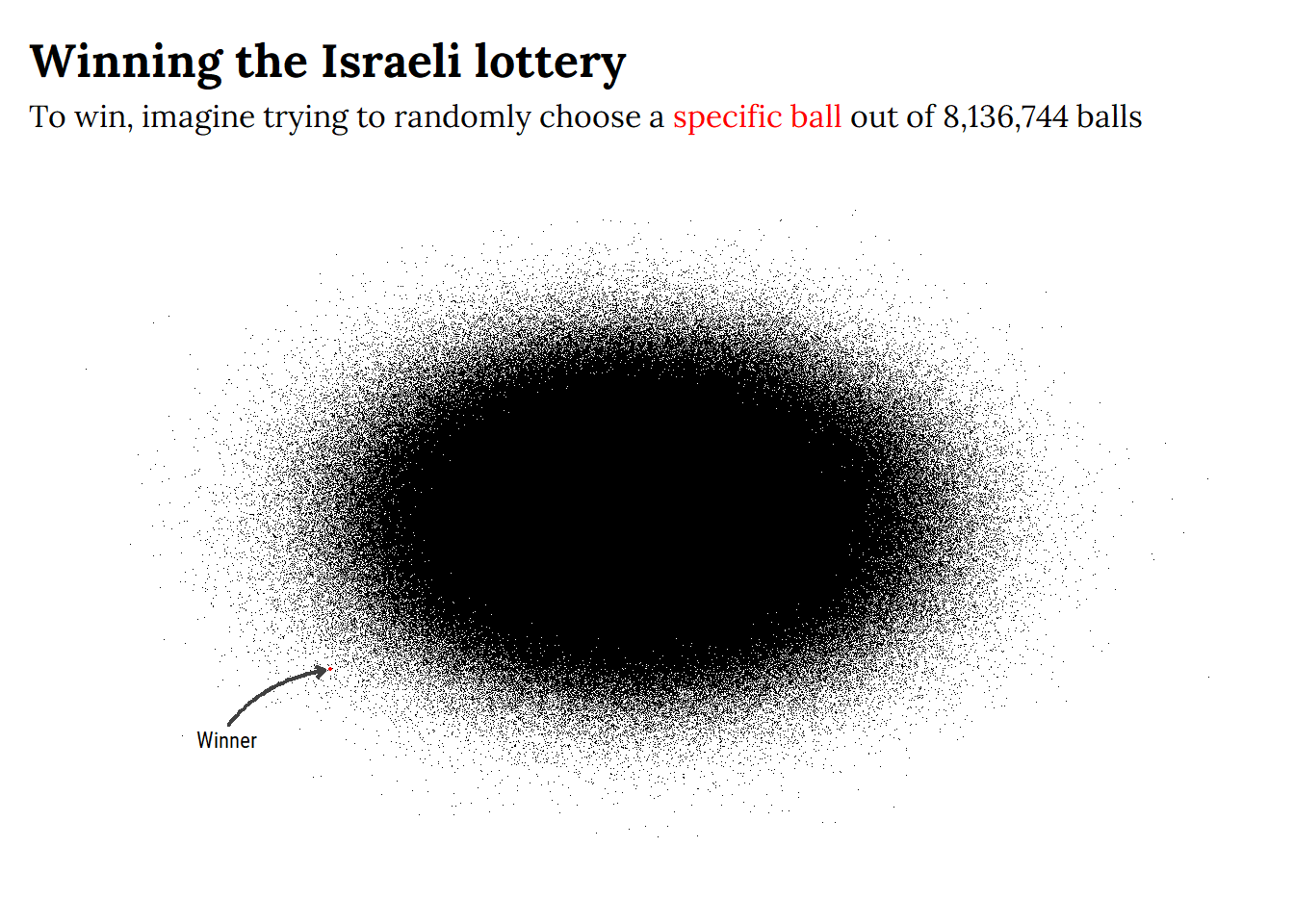

Wow! that’s a very low probability. How low? Let’s try and visualize it.

Sometimes when we receive a probability it’s hard to grasp the odds and numbers thrown at us. Therefore, I’ll try to visualize it for us. Imagine there’s a pool filled with 8,136,744 balls. One of those balls is red and choosing that exact red ball blindly will win you the lottery:

Code

library(ggplot2)

library(dplyr)

library(scattermore)

library(ggtext)

set.seed(123)

df_viz <- data.frame(x = rnorm(8136743, mean = 1000, sd = 1000),y = rnorm(8136743))

point_highlight <- data.frame(x = -1811.674, y = -2.268505588)

lot_p <- ggplot()+

geom_scattermost(df_viz, pointsize = 0.1, pixels = c(2000,2000))+

geom_point(data = point_highlight, aes(x = x, y = y), size = 0.3, color = "red")+

annotate(geom = "curve", x = -2750, xend = -1860, y = -3.10, yend = -2.29,

curvature = -.2, color = "grey25", size = 0.75, arrow = arrow(length = unit(1.5, "mm")))+

annotate("text" ,x = -2750, y = -3.30, label = "Winner", family = "Roboto Condensed", size = 3)+

labs(title = "Winning the Israeli lottery", subtitle = "To win, imagine trying to randomly choose a <b><span style='color:red'>specific ball</span></b> out of 8,136,744 balls")+

theme_void()+

theme(text = element_text(family = "Lora"),

plot.title.position = "plot",

plot.title = element_text(size = 18, face= "bold"),

plot.subtitle = element_markdown(size = 12),

plot.margin = margin(4,2,2,4, unit = "mm"))

Not easy is it?

Now that we know the probability of winning at each attempt, let’s see how it manifests across multiple attempts.

Multiple attempts - Geometric distribution

A Bernoulli trial is a random experiment with exactly two outcomes - such as success\failure, heads\tails - in which the probability for each outcome is the same every time (Wikipedia). This sets the ground for discussing an outcome of a lottery in which you either win or lose.

But we want to learn more about the distribution of attempts, and this brings us to the geometric distribution. A Geometric distribution enables us to calculate the probability distribution of a number of failures before the first success2.

Before we begin, we must meet several conditions to use the geometric distribution:

✔️ Each trial is independent from one another - succeeding in one trial doesn’t affect the next trial. We know this is true since winning in one lottery won’t affect your chances of winning the next round.

✔️ Every trial has an outcome of a success or failure. This assumption is true in our case where each lottery you participate in you either win or lose.

✔️ The probability of success is the same every trial - This is also true given the lottery probabilities are consistent across each game.

Now that we got the technicalities out of the way we can start exploring some of the uncertainty surrounding the lottery.

Winning at a given trial

We can denote the probability of winning as , which in the case of a lottery game is equal to . What if we wanted to know the probability of winning the lottery on the third try? That means we need two failures and then a success. If the probability of success - guessing the correct numbers - is , so the probability of a failure is , in this case . In order to win the lottery on the third try, this means getting two failures and then a success, resulting in a total of attempts. Thus, the probability of winning on the third attempt is as follows:

, equaling . In other words there’s a ~0.0000123% chance we’ll win the lottery exactly on the third try.

Generalizing, the probability distribution of the number of Bernoulli trials needed to get one success on the th trial is: . We can break this up according to our previous example:

-

stands for the probability of getting our value on the attempt. Meaning, we want to win the lottery only on the third attempt.

-

So our first two attempts should be a failure, thus a probability of multiplied by two (two rounds of failures), written as .

-

Lastly, stands for the probability of succeeding, occurring exactly on the attempt.

The probability we just discussed is also known as the probability mass function (PMF) of the geometric distribution. PMF is a function that gives the probability that a random discrete variable is exactly equal to some value. In our above example, the probability that we’ll win exactly on the third try.

Winning by a given trial

We don’t necessarily want to win the lottery on on a specific attempt, but explore the probabilities of winning by the th attempt. Reframing our previous question we can ask “what is the probability of winning the lottery on at least one of the first 3 attempts?”, bringing us to the Cumulative distribution function (CDF). In a cumulative distribution we calculate the probability that will take a value less than or equal to (in our case representing the number of attempts).

How does this question change our calculation?

Let’s assume we’re still talking about 3 attempts. Our new framed question means we want to win the lottery either on the first attempt, the second or the third. In other words, we want to add the probability of success when + + . Given that our probability of failure is , we can write the argument as follows: , inputting our values of , resulting in the probability of winning in one of the first three attempts , also written as a 0.0000368% chance.

But if we want to look at the first 50 attempts? we’ll have to sum each individual PMF?

Here’s exactly the use of the geometric CDF written as . We raise the probability of losing to the power of attempts to win by and deduct it from 1, resulting in the probability of winning by a given trial.

Winning on the first X trials

We just looked at the probability of winning on the first 3 trials, and now that we learned about the CDF we can calculate the probability of winning on the first trials, for e.g. on the first 100, 1000 and so on. In addition, another important factor we can take into account exploring the cumulative distribution is the money spent reaching each attempt.

We’ll start by declaring our values. We know the probability for winning the lottery with each ticket we have is (remember, we get to choose two sets of numbers in each ticket), so let’s declare that:

p <- 1/8136744

Next we know the probability for not winning is :

q <- 1 - p

Now we can create a data frame to account for some 250,000 attempts. We don’t need each attempt so we’ll simulate data for the first 50,000 and then have points spread out in a 500 interval jump all the way to the 100,000,000 attempt.

df_prob <- tibble(trial = c(1:50000, seq(50000, 1e8, 500)))

Once we have that we can calculate both the probability of winning up to a specific attempt and the cumulative amount of money spent reaching there (according to a price ticket of NIS 5.8):

df_prob <- df_prob %>%

mutate(cdf = 1 - (q)^trial,

money_spent = trial * 5.8)

head(df_prob)

## # A tibble: 6 × 3

## trial cdf money_spent

## <dbl> <dbl> <dbl>

## 1 1 0.000000123 5.8

## 2 2 0.000000246 11.6

## 3 3 0.000000369 17.4

## 4 4 0.000000492 23.2

## 5 5 0.000000614 29

## 6 6 0.000000737 34.8

Looks good!

We see our top 6 observations with 3 columns we just defined (from left to right): the lottery raffle (trial), the probability of winning at a given trial until that point (cdf) and the money spent by that trial. Our probability of winning at any trial is constant ($p$), so it’ll be redundant to add that in here.

Now let’s look at specific points along our data frame and see how much money is spent reaching there. More specifically, let’s look at the details of some attempts such as 1; 10; 100; 1000; 2500, 5000, , 1,000,000, 10,000,000; 50,000,000:

| Lottery probabilities with the geometric distribution | ||

|---|---|---|

| Lottery probabilities winning by a given attempt, the money spent reaching there and your chances of winning by then | ||

| 1 Money spent (rounded up) corresponds to the cumulative number of attempts played |

In the above table I printed specific observations along the lottery’s cumulative geometric distribution. In our left column we have the trial number, next the approximate amount of money spent up to that trial and lastly the percent of winning by that given trial. Notice that I converted the New Israeli Shekels to dollars ($1 dollar = ~ NIS 3.5).

If we played 100 consecutive games with the same number, we would spend 166 dollars by that point and have only a 0.00123% chance of winning. We only pass the 1% (!) chance of winning after buying more than 100,000 tickets, spending a total of $165,714 dollars.

To pass the 10% chance of winning you’d have to play 1,000,000 games and spend ~1,600,000 dollars! Reminder: the default prize is only some $1,412,000!

Average number of attempts

An interesting feature of the geometric distribution is that we can calculate the expected number of attempts and variance of the distribution. The expected number of attempts in the discussed geometric cumulative distribution is with a variance of . is basically the expected value for the number of independent trials needed to get the first success (Think of it as an average, only theoretically). So in our lottery example the expected number of attempts to reach a success is , resulting in 8,136,744 attempts.

R has built in functions for working with the geometric distribution such as pgeom, rgeom, qgeom and dgeom which you can explore more

here. For the purpose of exploring the mean we can use the rgeom function which generates a value representing the number of failures before a success occurred. For example, let’s see how many failures we’re required to reach one success:

rgeom(n = 1,p = p)

## [1] 1033084

In the above example rgeom takes the number of rounds (n = 1) and the probability of winning (p = p). The outputted value indicates the number of failures before our success.

Using this we can calculate the average number of attempts from 2,000,000 games:

mean(rgeom(2e6, p))

## [1] 8129074

Pretty close to our expected value!

So what does the mean in terms of the lottery? You’d have to play approximately 8,136,744 games to win the lottery, spending a total of NIS 47,193,115 (~$13,483,747) to win approximately NIS 5M (~1.42M dollars)!

Conclusion

In this post we were able to uncover and better understand some of the uncertainty that covers a lottery game. Using the geometric distribution we explored the probability of winning the lottery at a specific event, and winning it in the form of a cumulative distribution - Chances of winning up to a given trial.

Unfortunately, the numbers aren’t in our favor. You’d find yourself spending a great deal of money before actually winning the lottery. I’m definitely not going to tell you what to do with your money, but I hope this blog post helped you understand a little better the chances of (not) winning the lottery. But hey, apparently a New Yorker won the lottery after participating each week for 25 years so you never know.

Further reading \ exploring

Three resources I found extremely valuable in learning more about the geometric distribution:

-

The Geometric distribution Wikipedia’s page. I’m constantly amazed at the vast amount and well articulated statistical pages they have.

-

Continuing on that, I found the resource that the Wikipedia page relies on extremely helpful: “A modern introduction to probability and statistics : understanding why and how”.

-

If you’re more of a video kind of person, I highly recommend a video by The Organic Chemistry Tutor about the Geometric distribution. I think he does a superb job in explaining different various statistical analysis and always enjoys his videos.

Notes

-

An amusing anecdote is that the Pais organization offers information about ‘Hot’ numbers and the frequency of appearance for each number. Considering that the lottery is random I wouldn’t rely on such a pattern… ↩︎

-

In this blog post I only explore one aspect of the geometric PMF by looking at number of failures before the first success in a set of attempts. To read more about the PMF I recommend starting with the Wikipedia page of the Geometric distribution. ↩︎